Is ARM Ethos-U55 the 'UNO' of TinyML Revolution?

Is ARM Ethos-U55 the 'UNO' of TinyML Revolution?

Remember the first time you uploaded a sketch to your Arduino UNO, watched that LED blink, and felt like you’d just invented the arc reactor? That moment wasn’t just about blinking lights — it was about igniting a lifelong passion for building and innovating.

In the fast-evolving world of Tiny ML (Machine Learning on Microcontrollers), ARM Ethos-U55 is a total game-changer. Unlike traditional processors or even regular microcontrollers, Ethos-U55 brings dedicated AI acceleration to the table — with super low power consumption.

Up to 480× more ML performance and 90% less energy usage compared to traditional Cortex-M cores — all in a compact form factor built for ultra-low-power devices.

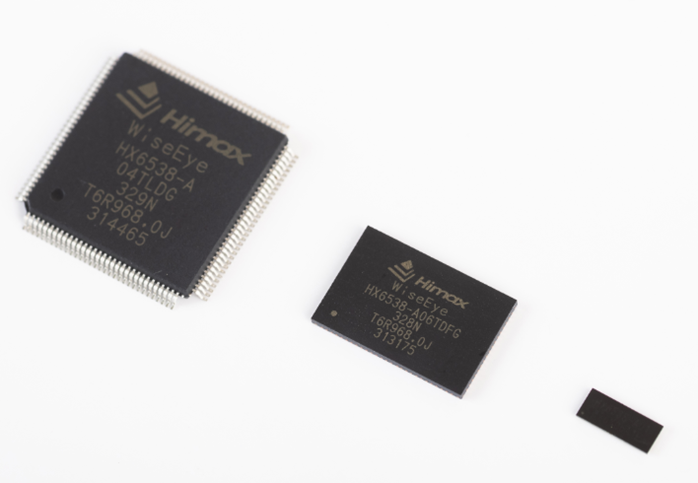

Interestingly, the Ethos-U55 is already powering real-world chips like the WiseEye2 AI Processor (WE2), bringing next-gen AI to the tiniest smart devices.

In this blog, let’s explore:

- What exactly is Ethos-U55?

- Why it stands out from other processors

- The cutting-edge technologies that make it powerful

What is ARM Ethos-U55?

Ethos-U55 is a microNPU (Micro Neural Processing Unit) developed by ARM for resource-constrained embedded systems, like Cortex-M class devices.

It acts as a co-processor to your main CPU and accelerates AI/ML workloads such as:

- Object detection

- Voice recognition

- Sensor data processing

It allows running complex ML models where traditional MCUs would struggle — without draining battery or needing bulky chips.

Why is Ethos-U55 Different from Other Processors?

Here’s the real secret sauce that makes Ethos-U55 a standout:

1. Based on ARM Helium Technology (MVE - M-Profile Vector Extension)

- 128-bit SIMD (Single Instruction, Multiple Data) capabilities

- Parallel ML operation processing

- Higher throughput without increasing frequency

→ Small MCUs now punch way above their weight.

2. 256 MAC Engine (Multiply-Accumulate Units)

- 256 MAC operations per cycle

- MAC is the backbone of most ML tasks (especially CNNs)

→ Lightning-fast AI with microcontroller-class power usage.

3. Deep CMSIS-NN Integration

- Optimized for ARM’s CMSIS-NN library

- Supports Conv2D, pooling, activation, etc.

- No need to rebuild models

→ Plug-and-play acceleration for your current ML models.

4. MicroNPU Driver for Easy Integration

- Manages model loading

- Efficient operation scheduling

- Works with TensorFlow Lite Micro

→ Easy to integrate into any embedded AI stack.

5. Vela Compiler: The Secret Weapon

- ARM’s ML compiler for Ethos-U55

- Handles:

Quantization (to int8)Operator fusionMemory tiling & optimization

→ Large models now fit inside SRAM and run fast.

6. MicroNPU Optimizer Inside Vela

- Fuses layers

- Optimizes memory layout

- Reduces compute overhead

→ Max AI performance in ultra-tiny power envelopes.

Summary

| Traditional MCU | Ethos-U55 Combo |

|---|---|

| Good for general tasks | Good for general + AI |

| Struggles with ML | Handles ML with ease |

| No MAC engine | 256 MACs per cycle |

| High power for ML | Ultra-low power |

That’s Why…

Ethos-U55 isn’t just a processor.

It’s a complete AI acceleration system, perfectly built for the Tiny ML revolution — where battery life, size, and compute efficiency are everything.

References

- ARM Ethos-U55 Product Overview

- CMSIS-NN GitHub Repository

- Vela Compiler Documentation

- WiseEye2 AI Processor (WE2)

- Grove Vision AI Module V2

Final Takeaway

If you’re working with embedded ML, the Ethos-U55 should absolutely be on your radar.

It’s not just about shrinking AI models — it’s about redefining what’s possible on the edge.